Set up Prometheus and Grafana to scrape Cilium metrics

The following manifest creates a new namespace, cilium-monitoring, and deploys Prometheus and Grafana into it.

kubectl apply -f https://raw.githubusercontent.com/cilium/cilium/1.15.1/examples/kubernetes/addons/prometheus/monitoring-example.yaml

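kubectl prints PodSecurity warnings for the Grafana and Prometheus containers because they do not meet the "restricted" profile. These are warnings only, and both Deployments are still created: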

namespace/cilium-monitoring created

serviceaccount/prometheus-k8s created

configmap/grafana-config created

configmap/grafana-cilium-dashboard created

configmap/grafana-cilium-operator-dashboard created

configmap/grafana-hubble-dashboard created

configmap/grafana-hubble-l7-http-metrics-by-workload created

configmap/prometheus created

clusterrole.rbac.authorization.k8s.io/prometheus created

clusterrolebinding.rbac.authorization.k8s.io/prometheus created

service/grafana created

service/prometheus created

Warning: would violate PodSecurity "restricted:latest": allowPrivilegeEscalation != false (container "grafana-core" must set securityContext.allowPrivilegeEscalation=false), unrestricted capabilities (container "grafana-core" must set securityContext.capabilities.drop=["ALL"]), runAsNonRoot != true (pod or container "grafana-core" must set securityContext.runAsNonRoot=true), seccompProfile (pod or container "grafana-core" must set securityContext.seccompProfile.type to "RuntimeDefault" or "Localhost")

deployment.apps/grafana created

Warning: would violate PodSecurity "restricted:latest": allowPrivilegeEscalation != false (container "prometheus" must set securityContext.allowPrivilegeEscalation=false), unrestricted capabilities (container "prometheus" must set securityContext.capabilities.drop=["ALL"]), runAsNonRoot != true (pod or container "prometheus" must set securityContext.runAsNonRoot=true), seccompProfile (pod or container "prometheus" must set securityContext.seccompProfile.type to "RuntimeDefault" or "Localhost")

deployment.apps/prometheus created
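
kubectl apply returns once the objects exist, not once the pods are ready. A minimal sketch of a wait step, assuming kubectl already targets the cluster; the function name wait_for_monitoring and the 120-second timeout are illustrative choices, not part of the Cilium example:

```shell
# Hypothetical helper: wait until the grafana and prometheus Deployments
# created by monitoring-example.yaml have finished rolling out.
wait_for_monitoring() {
  local ns="${1:-cilium-monitoring}"
  local deploy
  for deploy in grafana prometheus; do
    # rollout status exits non-zero if the Deployment is not ready in time
    kubectl -n "$ns" rollout status "deployment/${deploy}" --timeout=120s || return 1
  done
}
```

Run wait_for_monitoring before the next step; it returns non-zero if either Deployment is not ready within the timeout.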

Check the resources in the cilium-monitoring namespace

❯ kubectl get all -n cilium-monitoring

NAME READY STATUS RESTARTS AGE

pod/grafana-6f4755f98c-8c7sg 1/1 Running 0 57s

pod/prometheus-67fdcf4796-vc8hd 1/1 Running 0 57s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/grafana ClusterIP 10.102.147.187 <none> 3000/TCP 57s

service/prometheus ClusterIP 10.104.8.139 <none> 9090/TCP 57s

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/grafana 1/1 1 1 57s

deployment.apps/prometheus 1/1 1 1 57s

NAME DESIRED CURRENT READY AGE

replicaset.apps/grafana-6f4755f98c 1 1 1 57s

replicaset.apps/prometheus-67fdcf4796 1 1 1 57s

Change the service type to LoadBalancer so that Prometheus and Grafana are reachable from outside the cluster

❯ kubectl patch svc prometheus -n cilium-monitoring -p '{"spec": {"type": "LoadBalancer"}}'

❯ kubectl patch svc grafana -n cilium-monitoring -p '{"spec": {"type": "LoadBalancer"}}'

Both services now have TYPE LoadBalancer and an assigned EXTERNAL-IP. This assumes the cluster has a LoadBalancer implementation that can assign external IPs.

❯ kubectl get svc -n cilium-monitoring

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

grafana LoadBalancer 10.102.147.187 192.168.0.131 3000:32230/TCP 19m

prometheus LoadBalancer 10.104.8.139 192.168.0.130 9090:31588/TCP 19m
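
The EXTERNAL-IP is only filled in when something in the cluster implements LoadBalancer Services. A sketch of two hypothetical helpers: svc_external_ip reads the assigned IP with a JSONPath query, and svc_port_forward falls back to kubectl port-forward when the address stays <pending>. Some load balancers report a hostname instead of an IP; this JSONPath reads only the ip field.

```shell
# Hypothetical helpers for reaching the services in cilium-monitoring.
MON_NS="cilium-monitoring"

# Print the external IP assigned to a LoadBalancer service
# (empty output while EXTERNAL-IP is still <pending>).
svc_external_ip() {
  kubectl -n "$MON_NS" get svc "$1" -o jsonpath='{.status.loadBalancer.ingress[0].ip}'
}

# Fallback without a load balancer: forward a local port to the service.
# Stays in the foreground until interrupted with Ctrl-C.
svc_port_forward() {
  kubectl -n "$MON_NS" port-forward "svc/$1" "$2:$2"
}
```

For example, GRAFANA_IP=$(svc_external_ip grafana) yields 192.168.0.131 in the setup above. Without a load balancer, svc_port_forward grafana 3000 exposes Grafana at http://localhost:3000 while the command runs.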

Access the Grafana web page at http://192.168.0.131:3000 (the EXTERNAL-IP and port of the grafana service above)

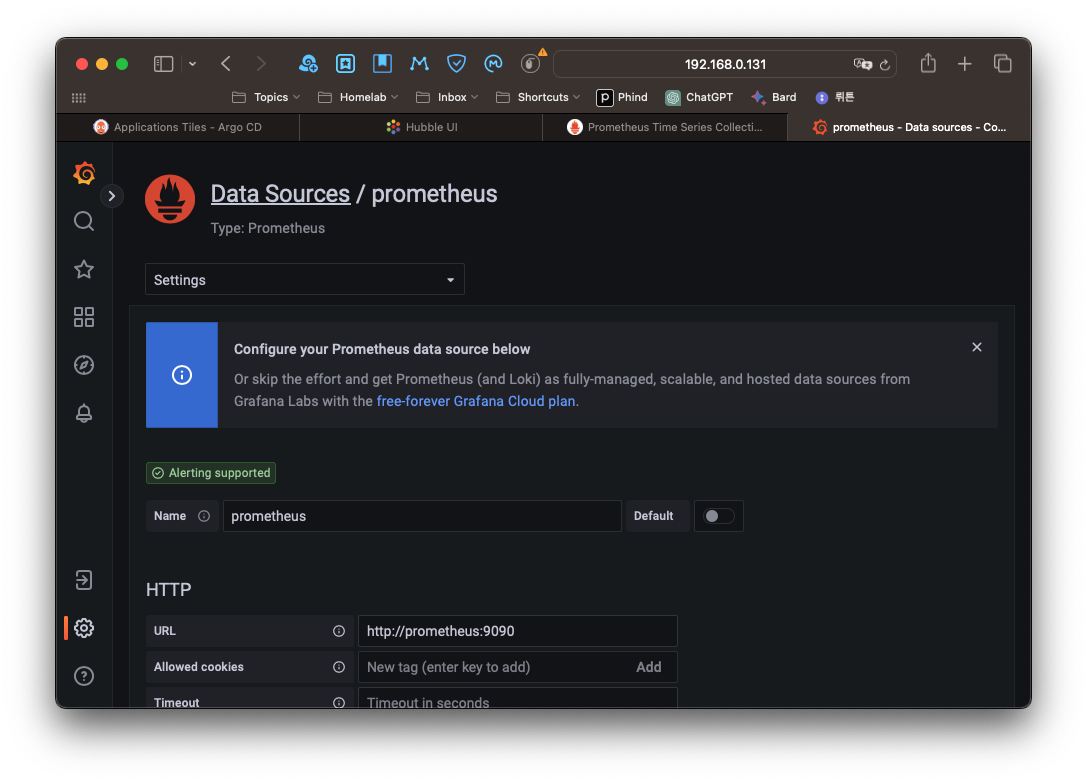
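
Before opening a browser, Grafana's health endpoint gives a quick check that the service answers. A sketch assuming the grafana EXTERNAL-IP and port from the service output above; GRAFANA_URL and grafana_health are hypothetical names, and GRAFANA_URL can be overridden (for example with http://localhost:3000 when port-forwarding):

```shell
# Hypothetical helper: check that Grafana responds.
# Default URL = EXTERNAL-IP:port of the grafana service in this walkthrough.
GRAFANA_URL="${GRAFANA_URL:-http://192.168.0.131:3000}"

grafana_health() {
  # /api/health does not require login; it returns a small JSON document
  # whose "database" field reads "ok" when Grafana is healthy.
  # -f makes curl fail on HTTP errors, -sS hides progress but keeps errors.
  curl -fsS "${GRAFANA_URL}/api/health"
}
```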

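Add Prometheus as a data source in Grafana. Inside the cluster, Prometheus is reachable through the prometheus service on port 9090 (http://prometheus.cilium-monitoring.svc:9090).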
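
The same step can be scripted against Grafana's HTTP API instead of the web UI. A sketch under these assumptions: Grafana accepts basic auth with its default admin/admin credentials (override GRAFANA_AUTH otherwise), and Grafana reaches Prometheus through the in-cluster service DNS name. GRAFANA_URL, GRAFANA_AUTH, PROM_IN_CLUSTER_URL, and add_prometheus_datasource are hypothetical names. If a data source with the same name already exists, Grafana rejects the request and curl -f reports the error.

```shell
# Hypothetical helper: register Prometheus as a Grafana data source via the API.
GRAFANA_URL="${GRAFANA_URL:-http://192.168.0.131:3000}"
GRAFANA_AUTH="${GRAFANA_AUTH:-admin:admin}"   # assumption: default Grafana credentials
PROM_IN_CLUSTER_URL="http://prometheus.cilium-monitoring.svc:9090"

add_prometheus_datasource() {
  # access=proxy: the Grafana server (inside the cluster) queries Prometheus,
  # so the in-cluster service name resolves.
  curl -fsS -u "$GRAFANA_AUTH" \
    -H 'Content-Type: application/json' \
    -X POST "${GRAFANA_URL}/api/datasources" \
    -d "{\"name\":\"Prometheus\",\"type\":\"prometheus\",\"access\":\"proxy\",\"url\":\"${PROM_IN_CLUSTER_URL}\"}"
}
```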

Enable metrics from Cilium and Hubble

Cilium, Hubble, and Cilium Operator do not expose metrics by default. Enabling metrics for these components opens ports 9962, 9965, and 9963 respectively on every node where they run.
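
Once metrics are enabled, one way to confirm that a component serves them is to read its /metrics endpoint directly. A sketch under these assumptions: agent pods carry the Helm chart's k8s-app=cilium label, operator pods carry io.cilium/app=operator, Hubble metrics are served by the agent pod, and curl is installed locally. cilium_metrics_sample is a hypothetical helper name.

```shell
# Hypothetical helper: port-forward to one Cilium pod and print the first
# lines of its /metrics output.
# Ports: 9962 = Cilium agent, 9965 = Hubble (agent pod), 9963 = operator.
cilium_metrics_sample() {
  local port="${1:-9962}"
  local selector="${2:-k8s-app=cilium}"   # assumption: default chart labels
  local pod pf_pid
  pod=$(kubectl -n kube-system get pods -l "$selector" -o name | head -n 1)
  if [ -z "$pod" ]; then
    echo "no pod matches $selector" >&2
    return 1
  fi
  # Run the port-forward in the background and discard its output.
  kubectl -n kube-system port-forward "$pod" "${port}:${port}" >/dev/null 2>&1 &
  pf_pid=$!
  sleep 2  # crude wait for the tunnel to come up
  curl -fsS "http://localhost:${port}/metrics" | head -n 5
  kill "$pf_pid" 2>/dev/null || true
}
```

Usage: cilium_metrics_sample 9962 for the agent, cilium_metrics_sample 9965 for Hubble, and cilium_metrics_sample 9963 io.cilium/app=operator for the operator.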

Enable metrics during installation with Helm

Add these options to the helm install command:

--set prometheus.enabled=true \

--set operator.prometheus.enabled=true \

--set hubble.enabled=true \

--set hubble.metrics.enableOpenMetrics=true \

--set hubble.metrics.enabled="{dns,drop,tcp,flow,port-distribution,icmp,httpV2:exemplars=true;labelsContext=source_ip\,source_namespace\,source_workload\,destination_ip\,destination_namespace\,destination_workload\,traffic_direction}"
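
The same settings can live in a values file, which avoids the \, escaping that --set needs for commas inside one list item. A sketch that writes such a file; the file name cilium-metrics-values.yaml is a hypothetical choice. In YAML the labelsContext commas need no escaping, and the httpV2 item is quoted so it stays one string.

```shell
# Write the metrics-related Helm values from the flags above into a file.
cat > cilium-metrics-values.yaml <<'EOF'
prometheus:
  enabled: true
operator:
  prometheus:
    enabled: true
hubble:
  enabled: true
  metrics:
    enableOpenMetrics: true
    enabled:
      - dns
      - drop
      - tcp
      - flow
      - port-distribution
      - icmp
      - "httpV2:exemplars=true;labelsContext=source_ip,source_namespace,source_workload,destination_ip,destination_namespace,destination_workload,traffic_direction"
EOF
```

The file can then be passed with -f cilium-metrics-values.yaml to helm install, or to helm upgrade together with --reuse-values, in place of the individual --set flags.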

Update an existing Cilium Helm release (--reuse-values keeps the release's other settings)

helm upgrade cilium cilium/cilium --version 1.15.1 \

--namespace kube-system \

--reuse-values \

--set prometheus.enabled=true \

--set operator.prometheus.enabled=true \

--set hubble.enabled=true \

--set hubble.metrics.enableOpenMetrics=true \

--set hubble.metrics.enabled="{dns,drop,tcp,flow,port-distribution,icmp,httpV2:exemplars=true;labelsContext=source_ip\,source_namespace\,source_workload\,destination_ip\,destination_namespace\,destination_workload\,traffic_direction}"
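
Depending on which settings change, running agents may keep the old configuration until their pods restart. A hedged sketch that restarts the agent DaemonSet and the operator Deployment and waits for both, assuming the chart's default object names (ds/cilium and deployment/cilium-operator in kube-system); restart_cilium is a hypothetical name:

```shell
# Hypothetical helper: restart Cilium agents and operator so they pick up
# the updated configuration, then wait for both rollouts to finish.
restart_cilium() {
  kubectl -n kube-system rollout restart ds/cilium &&
  kubectl -n kube-system rollout restart deployment/cilium-operator &&
  kubectl -n kube-system rollout status ds/cilium --timeout=300s &&
  kubectl -n kube-system rollout status deployment/cilium-operator --timeout=300s
}
```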

Check Prometheus Targets

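The Prometheus configuration from the example manifest already includes scrape targets, so after metrics are enabled they show up on the targets page of the Prometheus web UI (http://192.168.0.130:9090):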

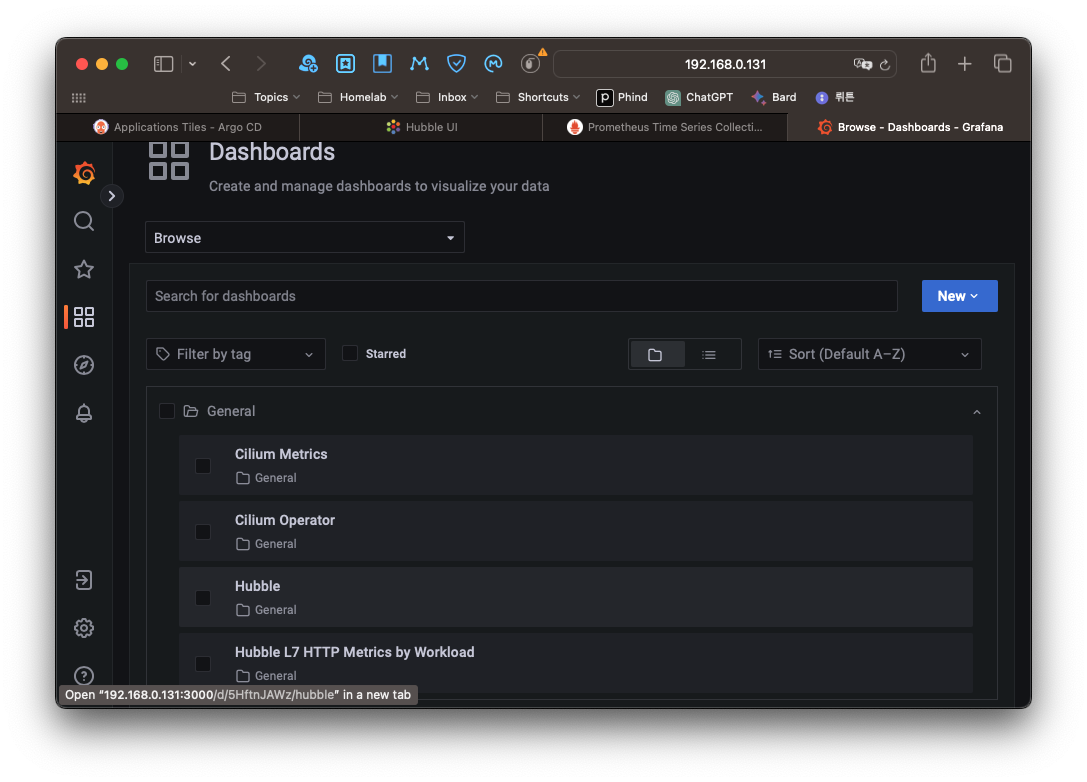
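
The targets page is backed by the Prometheus HTTP API, which can also be queried from the command line. A sketch assuming the prometheus EXTERNAL-IP from the service output above; PROM_URL, prom_active_targets, and prom_up are hypothetical names:

```shell
# Hypothetical helpers: inspect scrape targets through the Prometheus HTTP API.
PROM_URL="${PROM_URL:-http://192.168.0.130:9090}"

# JSON list of the targets Prometheus is currently scraping.
prom_active_targets() {
  curl -fsS "${PROM_URL}/api/v1/targets?state=active"
}

# Instant query for the built-in "up" series: 1 per reachable target, 0 otherwise.
prom_up() {
  curl -fsS -G "${PROM_URL}/api/v1/query" --data-urlencode 'query=up'
}
```

If jq is installed, prom_up | jq -r '.data.result[] | "\(.metric.job) \(.metric.instance) \(.value[1])"' prints one line per target.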

Grafana dashboard for Cilium metrics
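
The example manifest created ConfigMaps with Cilium, Cilium Operator, and Hubble dashboards (grafana-cilium-dashboard, grafana-cilium-operator-dashboard, grafana-hubble-dashboard). A sketch that lists the dashboards Grafana has loaded, assuming basic auth with Grafana's default admin/admin credentials; GRAFANA_URL, GRAFANA_AUTH, and grafana_dashboards are hypothetical names:

```shell
# Hypothetical helper: list dashboards known to Grafana.
GRAFANA_URL="${GRAFANA_URL:-http://192.168.0.131:3000}"
GRAFANA_AUTH="${GRAFANA_AUTH:-admin:admin}"   # assumption: default Grafana credentials

grafana_dashboards() {
  # type=dash-db restricts the search API to dashboards (no folders)
  curl -fsS -u "$GRAFANA_AUTH" "${GRAFANA_URL}/api/search?type=dash-db"
}
```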